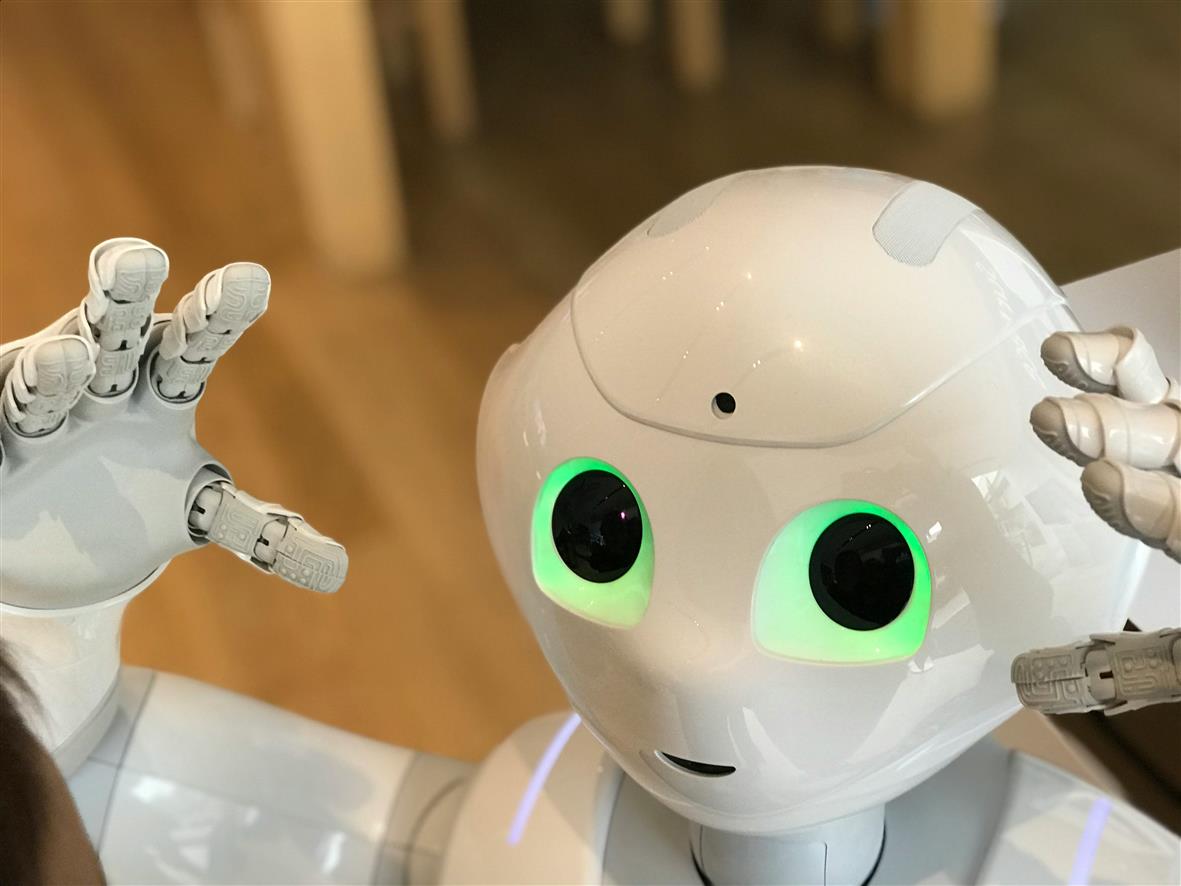

For centuries, understanding emotions was a uniquely human skill. We read each other’s faces, tones, silences. We guessed, misread, reinterpreted. Now, machines are stepping into this deeply human territory—not just identifying what we say, but how we feel when we say it.

Emotion recognition technology (ERT) is no longer confined to sci-fi movies or academic labs. It’s here, increasingly integrated into apps, cars, classrooms, job interviews, and even law enforcement tools. Cameras track micro-expressions. Algorithms dissect vocal tone. Wearables monitor pulse, sweat, and eye movement. The goal? Decode our inner state with a degree of consistency and scale that humans can’t always match.

But the rise of this emotionally aware tech raises complicated questions. If machines can read feelings better than people, what do we gain—and what do we lose?

From Facial Twitches to Algorithmic Labels

Emotion recognition systems rely on a mix of facial analysis, voice processing, physiological signals, and behavioral cues. Using these inputs, AI models map responses to categories such as anger, joy, fear, sadness, or boredom. Some systems claim to go even deeper—detecting sarcasm, stress, or deception.
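To make that mapping concrete, here is a minimal sketch of one common way to combine signals, often called late fusion: each input channel produces its own scores per emotion, and a weighted average picks the final label. Everything in it is an assumption for illustration. The function names, the weights, and the example scores are hypothetical and do not describe any particular product.

```python
# Emotion categories named in the text; real systems may use different sets.
EMOTIONS = ["anger", "joy", "fear", "sadness", "boredom"]


def fuse_scores(modality_scores, weights):
    """Combine per-modality emotion scores with a weighted average (late fusion).

    modality_scores: dict mapping a modality name to a dict of emotion -> score.
    weights: dict mapping a modality name to its (hypothetical) trust weight.
    """
    total_weight = sum(weights[m] for m in modality_scores)
    fused = {}
    for emotion in EMOTIONS:
        weighted_sum = sum(
            weights[m] * scores.get(emotion, 0.0)
            for m, scores in modality_scores.items()
        )
        fused[emotion] = weighted_sum / total_weight
    return fused


def top_label(fused):
    """Return the emotion with the highest fused score."""
    return max(fused, key=fused.get)


# Made-up scores standing in for the output of face, voice, and wearable models.
example_scores = {
    "face": {"anger": 0.10, "joy": 0.60, "fear": 0.05, "sadness": 0.15, "boredom": 0.10},
    "voice": {"anger": 0.20, "joy": 0.30, "fear": 0.10, "sadness": 0.30, "boredom": 0.10},
    "physiology": {"anger": 0.25, "joy": 0.25, "fear": 0.20, "sadness": 0.20, "boredom": 0.10},
}
example_weights = {"face": 0.5, "voice": 0.3, "physiology": 0.2}

fused = fuse_scores(example_scores, example_weights)
label = top_label(fused)
print(label, round(fused[label], 2))
```

Note what the sketch leaves out: the final label is just the largest number, with no awareness of context, culture, or whether the face and the voice disagree. That gap is where much of the trouble discussed below begins.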

Tech giants, startups, and governments are all exploring the possibilities. Cars that slow down when they detect driver fatigue. Education tools that adapt lessons based on a student’s frustration levels. Corporate platforms that assess emotional tone during video meetings. All of it is happening now, not ten years from now.

The push is partly economic (emotions drive consumer behavior, after all) but also cultural. As people spend more time interacting with screens than with other people, companies are racing to humanize those interactions. Emotion-aware machines promise a bridge: understanding without judgment, feedback without fatigue.

But beneath the surface lies a messier reality.

The Promise (and Problem) of Precision

At first glance, machines might seem like perfect emotional readers. They don’t get distracted. They don’t project their own biases (at least not consciously). They don’t get tired.

But humans are not always predictable. A smile can be a mask. Tears can signal relief, not sadness. Culture, personality, context—all influence emotional expression. Even when humans struggle to interpret each other, we have social experience and empathy to navigate the grey zones. Machines? Not so much.

That hasn’t stopped companies from making big claims. Some platforms boast 90%+ accuracy in detecting basic emotions. Others integrate real-time emotion tracking into customer service or hiring tools. But critics argue that many of these systems are built on flawed assumptions—like the idea that specific emotions always correlate to specific facial expressions.

Worse still, the training data often reflects narrow cultural and racial representations, which can lead to biased results. An emotion recognition tool trained mostly on Western faces, for example, may misread expressions from other backgrounds entirely.

So while the tech is advancing, the confidence in its accuracy—especially across diverse populations—is far from solid.
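One way to see why a single headline accuracy figure can mislead is to break the number down by group. The sketch below does that on toy records invented purely for illustration; the group names, labels, and the `accuracy_by_group` helper are hypothetical, not drawn from any real evaluation.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy separately for each group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted_label in records:
        total[group] += 1
        if true_label == predicted_label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Toy data, invented for illustration only.
records = [
    ("group_a", "joy", "joy"),
    ("group_a", "anger", "anger"),
    ("group_a", "sadness", "sadness"),
    ("group_a", "fear", "fear"),
    ("group_a", "joy", "sadness"),
    ("group_b", "joy", "joy"),
    ("group_b", "anger", "fear"),
    ("group_b", "sadness", "boredom"),
    ("group_b", "fear", "fear"),
    ("group_b", "joy", "anger"),
]

per_group = accuracy_by_group(records)
overall = sum(1 for _, t, p in records if t == p) / len(records)
print(per_group, overall)
```

In this toy set the overall figure sits between the two groups and hides the fact that one group is misread far more often than the other. A vendor's single accuracy claim says little unless it comes with this kind of breakdown.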

Emotional Surveillance or Empathetic Tools?

Emotion recognition technology lives in a grey space between utility and intrusion.

On one hand, the potential benefits are real. Mental health apps could flag early signs of depression based on tone and facial expression. Elder care systems might alert caregivers if a senior shows distress. Public safety tools could detect agitation in a crowded venue before violence breaks out.

In these contexts, emotional insights offer care and prevention, not control.

But what happens when the same technology is used to monitor employee productivity, detect lies during job interviews, or rank job applicants based on their facial “confidence”?

That’s already happening. Some companies have piloted systems that analyze facial and vocal cues during Zoom interviews to flag desirable personality traits. Others monitor call center staff for emotional tone in real time. In schools, cameras assess student engagement, silently scoring them on attention and emotion.

It doesn’t take much imagination to see how these systems could cross ethical lines—especially if decisions are made based on emotional “scores” that subjects can’t contest or even see.

Emotion as Data: Who Owns It?

A smile. A blink. A sigh. In the world of ERT, all of it becomes data—collected, stored, analyzed.

But unlike other types of data, emotional cues are deeply personal. They come from the body, not just behavior. And that raises a unique set of privacy concerns.

Who has the right to track our emotions? Is consent meaningful if it’s buried in a terms-of-service agreement? Can you truly opt out if emotional recognition becomes embedded in public spaces or essential services?

These questions remain largely unanswered. There are no global standards for emotional data, and few jurisdictions explicitly regulate it. The EU’s GDPR treats health data and biometric data used to identify a person as special categories, but it does not list emotional data by name, so how far those protections reach emotion inference is still murky. In most of the world, your emotions, once captured by a device, effectively belong to whoever collects them.

Human Connection in an Automated Age

There’s also a more existential concern. If machines become better at recognizing our emotions than other people, what happens to human connection?

Do we come to rely on our devices to interpret our moods? Will we outsource emotional labor to apps? Does empathy lose something when it’s reduced to a reading, a chart, a notification?

Emotion recognition promises efficiency. But emotional experience isn’t meant to be efficient. It’s awkward, fluid, contradictory. A friend might miss the signs you’re upset, but they can hug you. A spouse might misread your silence, but they sit with it.

Machines can mimic understanding. They can process signals. But they don’t feel anything. And at some level, we still seem to need that shared vulnerability.

Where Do We Go From Here?

Emotion recognition is not going away. If anything, it will become more common, more accurate, and more embedded in the systems around us. The question is whether it serves us—or begins to shape us.

To move forward responsibly, we’ll need:

- Transparency from companies about how emotional data is used, stored, and shared.

- Regulation that treats emotional data as a sensitive category, subject to stricter consent and control.

- Cultural sensitivity in how systems are trained and applied across different populations.

- Ethical boundaries, especially in high-stakes areas like employment, policing, and education.

And perhaps most of all, we need to ask: what does it mean to be understood? If emotion is reduced to data, do we gain clarity or lose complexity?

Emotion recognition may one day help us understand each other better. But it can never replace the real work of empathy. That still belongs to us.
