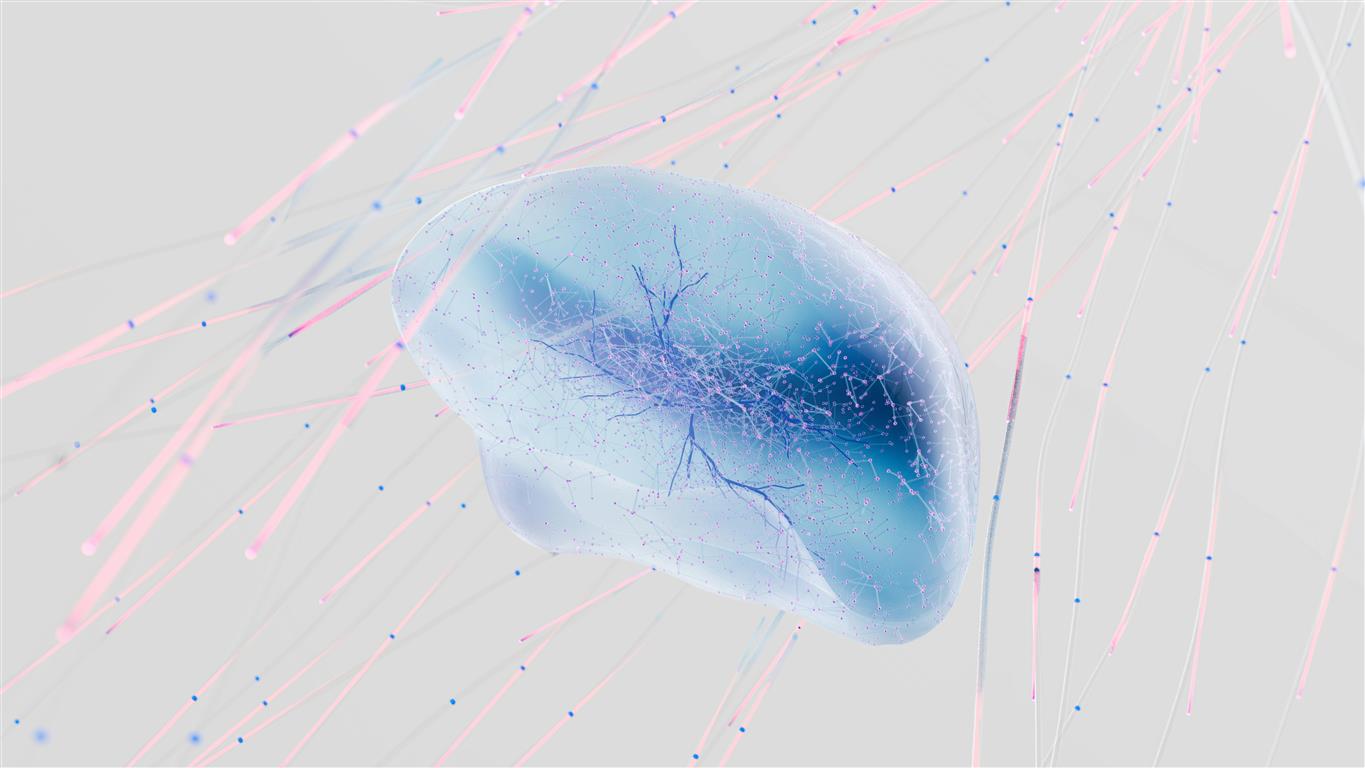

In labs and startups across the world, scientists and engineers are building tools that can listen to the brain—not just in theory, but in real time. Headbands that monitor attention levels, implants that restore movement to paralyzed limbs, AI models that interpret internal speech—all are part of a rapidly advancing field: neurotechnology.

What was once the realm of science fiction has become a bustling frontier of neuroscience, computer science, and ethics. As the ability to read and interpret brain signals grows more precise, so do the questions it raises. Chief among them: Who owns the data derived from your brain? And can you ever meaningfully consent to giving it away?

From Brainwaves to Behavior

The term “neurotechnology” covers a range of tools and devices that interact with the nervous system—whether to record signals, stimulate neurons, or decode intention. Some of these tools are invasive, like brain-computer interface (BCI) implants. Others are non-invasive: EEG headsets, functional MRI scans, or transcranial stimulation.

Initially developed for medical use—helping patients with ALS communicate, restoring hearing via cochlear implants, or treating depression through neural stimulation—these technologies are now edging toward broader applications. Consumer-grade devices claim to boost focus, monitor stress, or enhance meditation. Meanwhile, researchers are working on BCIs that can translate neural activity into speech for people unable to speak, or control robotic limbs through thought.

But as these systems become more capable, the line between assistance and intrusion becomes harder to draw.

Decoding Thought: A New Kind of Data

Unlike typing habits, location history, or even biometric fingerprints, brain data hits closer to the self. It can capture emotion, intention, even pre-conscious reactions. In some experiments, researchers have used fMRI recordings to reconstruct approximations of images people were viewing, or to recover the gist of sentences they silently imagined, with surprising accuracy.

That level of interpretation brings us to a new kind of personal data—not just what you do, but what you think.

It also introduces a new category of risk. If companies, governments, or malicious actors can access your neural patterns, what stops them from misinterpreting or manipulating that information? And how can you ever prove what you were—or weren’t—thinking?

The issue isn’t just privacy. It’s agency.

The Consent Problem

Informed consent is the backbone of ethical research and medicine. But when it comes to neurotech, consent becomes murkier. Can a person really grasp what they’re agreeing to when they allow a company to access their brain data?

Most consumer EEG devices, for instance, come with terms of service—dense legal documents that bury complex data-sharing clauses. The average user has no clear idea how their brain signals are stored, analyzed, or monetized. And unlike passwords, neural signals can’t be changed.

Moreover, brain data is inferential by nature. It doesn’t say something as straightforward as “X person visited Y website.” Instead, it’s interpreted through algorithms that look for patterns, correlations, and responses. These interpretations can be wrong—or biased. Yet they can still be stored, sold, or used to train machine learning models.

In short: neurotechnology gathers data that people may not fully understand, from a system they can’t easily control, to draw conclusions they may never see. That’s not just a consent issue—it’s a power imbalance.

Who Owns the Mind?

Ownership of brain data is largely uncharted legal territory. Many countries have data protection laws covering health records, location data, and biometric information. But brain data, especially from consumer neurotech, is often treated as just another data stream.

That might not be enough.

Some ethicists argue that neural data should be protected under a new category: cognitive liberty. This concept frames the mind as sovereign territory—off-limits to surveillance, manipulation, or unauthorized decoding. It extends the right to privacy into the internal landscape of thought.

In 2017, neuroscientist Rafael Yuste, ethicist Sara Goering, and colleagues set out ethical priorities for neurotechnology that helped launch the push for “neurorights”: basic principles to protect individuals from exploitation via neurotechnology. These include rights to mental privacy, personal identity, agency, and fair access to mental augmentation. In 2021, Chile became the first country in the world to enshrine some of these into law, amending its constitution to safeguard “the physical and mental integrity” of individuals in the face of neurotechnology.

Other nations are watching, but few have acted. For now, the companies building these tools are left to self-regulate—and history suggests that’s a shaky proposition.

Beyond Medical Use

Medical applications of neurotech tend to exist within stricter ethical guardrails. Clinical trials, physician oversight, and institutional review boards provide layers of protection. But outside the hospital, the rules are looser.

Several companies now offer “neuro-enhancement” products marketed to athletes, gamers, students, and professionals. Some promise better memory, sharper focus, or improved emotional regulation. The tech behind them is often still in development, and the marketing stretches the science. But the data they gather is real, and it is frequently uploaded to the cloud.

It’s not hard to imagine a future where employers ask workers to wear focus-monitoring headsets, or schools track students’ attention during lessons. What starts as voluntary may become expected. And the line between helping people and monitoring them grows thinner with each update.

The Role of Big Tech

Tech companies have already moved into the neural interface space. Meta (Facebook) has developed a wrist-worn interface that reads the electrical signals the nervous system sends to the hand. Elon Musk’s Neuralink has begun implanting chips in human brains. These efforts have generated both excitement and fear, particularly around what could happen if personal thoughts became another data stream to harvest, profile, and commodify.

Even if the current capabilities are limited, the intent is clear: the brain is the next data frontier.

And once tech companies enter that frontier, questions around consent and ownership will only intensify.

Cultural and Psychological Impact

Beyond the legal and ethical debates lies a subtler effect: how neurotech may change the way we think about thinking.

If a headset tells you you’re not focused, do you believe it? If an app says your brain is underperforming, do you feel less capable? What happens to self-trust when your own internal state is measured, analyzed, and scored?

There’s a psychological cost to constant cognitive measurement. It may lead to more self-awareness, but also to more self-doubt, greater reliance on external validation, and dependence on tools that claim to know your mind better than you do.

And once brain data becomes normalized, opting out might come with social or professional costs.

Toward a Framework of Mindful Technology

Neurotechnology holds extraordinary promise. It can help people with disabilities communicate. It may one day aid mental health diagnosis or recovery. But without clear ethical frameworks and public understanding, it risks becoming another tool for surveillance, control, or commercial exploitation.

To avoid that future, we need to develop principles that match the stakes. These could include:

- Right to mental privacy: Brain data should be considered intimate and protected accordingly.

- Transparent consent: Users should understand what’s collected, how it’s interpreted, and what it’s used for.

- Auditability: Algorithms that interpret neural data must be explainable and open to scrutiny.

- Agency: People must retain control over their neural data and what is inferred from it, not just at the moment they consent but for as long as that data exists.

- Limits on use: Especially in employment, education, or law enforcement, the use of neurotech should be clearly regulated.

As a society, we must ask not just what neurotechnology can do, but what it should do—and who gets to decide.

Final Thoughts

The brain is not just another interface. It’s the core of our identity, memory, emotion, and experience. As neurotech edges closer to decoding thought itself, the need for consent, transparency, and legal protection becomes urgent.

We cannot afford to treat mental data like another tech commodity. Because once the inside of your head becomes legible to machines, the question of ownership stops being academic. It becomes personal. Deeply personal.

And in that moment, perhaps more than any before, the right to think freely must remain our own.